
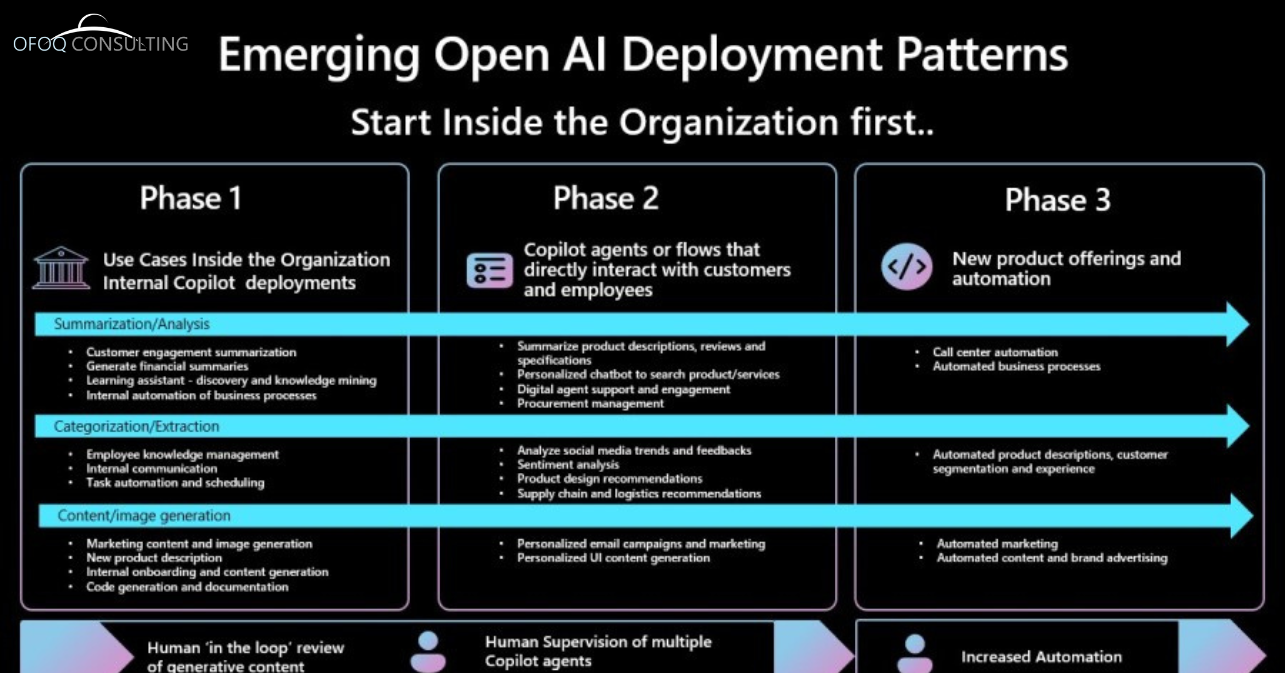

Generative AI: From Obscurity to Business Driver

The concept of generative AI was virtually unknown just a year ago. However, the launch of OpenAI's ChatGPT changed the game. With over 100 million users within a staggering 64 days, ChatGPT sparked a major shift in conversations. Business teams and their IT counterparts went from "what is it?" to exploring how to leverage this technology. The impact wasn't limited to internal discussions at Microsoft. Customer-facing teams also saw a surge in interest, with generative AI becoming a prominent topic in conversations and major projects.

This initial excitement has evolved. Microsoft, through its customer interactions, has identified valuable lessons to help businesses maximize the return on investment (ROI) from generative AI. The article delves into four key areas to consider when exploring this technology within your organization.

1. Define Your Problem, Not Just Play with the Tech

Don't get caught up in the novelty of generative AI. Resist the urge to simply experiment without a clear purpose. Instead, focus on the specific business problems you're trying to solve. Clearly define what "success" looks like for these problems. This will help you "paint the vision" and guide your use of generative AI.

Identify and Prioritize Use Cases

Start by brainstorming potential applications, or use cases, where generative AI could be beneficial. Evaluating these use cases more closely might reveal alternative solutions for some. You might also find that certain use cases share similarities, allowing you to group them for a more efficient approach.

Prioritization is Key: Impact vs. Effort

A crucial step is prioritizing which use cases to tackle first. Consider the potential business impact of each use case against the effort required to implement it. This helps you focus on areas that deliver the most value for your investment. Microsoft uses a simple yet effective method: an "Impact vs. Effort" matrix. Each potential use case is placed on the matrix based on its estimated effort (low or high) and its anticipated business impact (low or high). This visual approach helps prioritize projects effectively.

Assessing Impact and Effort

When evaluating impact, consider factors beyond just immediate benefits. Think about how generative AI could lead to faster completion times, improved decision-making, and cost savings for your business. However, also consider the timeframe for realizing these benefits. Effort, on the other hand, refers to the time and money required to implement the use case. Be mindful of the different systems and personnel involved. This will help you realistically assess the resources needed to achieve the desired outcome.

Defining Success with Measurable Goals

Once you've identified your target use case(s), it's vital to define clear success metrics. What does "good" look like in this context? Establish quantifiable measures based on time, effort, or cost savings to determine the effectiveness of your generative AI implementation. The more specific and measurable your goals, the better you can track progress and gauge success.

2. Start Internally: Test and Refine Before Launch

While it might seem counterintuitive for a customer-focused organization, starting with internal testing can be highly beneficial. Generative AI is a new technology, and piloting it within your company offers several advantages.

Firstly, it allows you to test the technology and refine your approach in a controlled environment. Your own internal teams act as a "friendly audience" for experimentation. This helps identify potential challenges and refine your use of generative AI before deploying it to external customers.

Secondly, internal testing lays the groundwork for a comprehensive generative AI roadmap. This roadmap can be structured using a "Crawl-Walk-Run" approach, with three distinct phases:

Phase 1: Crawl - Building the Foundation

The crawl phase focuses on internal use cases with "human-in-the-loop" reviews of generated content. This is a safe and controlled way to gain experience. Here are some potential use cases for retail and consumer goods companies:

Phase 2: Walk - Direct Engagement with Employees and Customers

At this stage, humans may still oversee many of the scenarios, each aimed at giving employees or customers insights for sounder decision-making. Examples in retail and consumer goods might include:

Phase 3: Run - Enhanced Automation

This final phase emphasizes new product introductions or automation intended for direct interaction with your customers. In retail or consumer goods, examples might include:

3. Lead with Business-Oriented Solutions

Focusing on use cases with an emphasis on their potential impact and required effort ensures that your efforts are concentrated on solving problems that offer tangible value to your organization. Visualizing your objectives and what you aim to accomplish is critical in this process.

Continuous feedback from business units is crucial. As with any initiative where technology is a major component, the most effective projects are those where there is a strong partnership between the technological and business sides, both aligned towards addressing a specific organizational issue.

For technology-driven projects, including those involving generative AI, it's vital to consider the synergy between people, processes, and technology, and to pay attention to each aspect. For instance, in your efforts related to specific use cases, it's important to:

- Identify the business processes linked to your use case. Comprehend what triggers these processes and understand the interactions with various systems and stakeholders.
- Clarify the roles of individuals involved in the process and recognize who will be affected by the successful implementation and utilization of your use case in the operational setting.
- Determine how involved individuals will engage with the process: clarify the timing and methods for notifying them about necessary interactions or approvals. This aspect gains importance when considering the principles of responsible AI, specifically accountability.
- Examine your use case through the lens of responsible AI. Utilizing tools such as the Microsoft Responsible AI Impact Assessment can streamline this aspect.
- Give thorough consideration to change management. Knowing the individuals impacted is key to facilitating this process.

The novelty of generative AI and the extensive coverage it receives in the media mean there are sensitivities to navigate. Applying and respecting Microsoft's responsible AI principles, including fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability, is beneficial in addressing these challenges.

4. Prepare Your Team for Rapid Adaptation

The enthusiasm for generative AI's potential is well-founded: McKinsey estimates that it could contribute $2.6 trillion to $4.4 trillion annually to the global economy. These figures are particularly noteworthy when considering that the GDP of the United Kingdom stood at $3.1 trillion in 2021. Leveraging this technology for innovation is therefore crucial, and it requires fostering skill development within your organization so that use cases can be implemented quickly.

There may be a temptation to adopt a 'fast follower' strategy, where you quickly emulate a competitor's generative AI-powered solution that benefits their customers, possibly improving upon it. This strategy is viable, but it demands that in-house skills and expertise be developed over time to effectively compete. Focusing on the use cases you have identified and are innovating upon is among the most effective methods to develop the necessary in-house expertise.

ChatGPT's achievement of reaching 100 million users within just 64 days, establishing it as the quickest-growing consumer app in history, underscores the potential for generative AI to be the fastest-expanding technology segment ever witnessed. As the realm of possibilities and advantages continues to rapidly evolve, attentively considering these aspects will enable your organization to learn and effectively leverage the capabilities of generative AI. This strategic approach not only enhances your business's value but also leaves your organization better placed to navigate the swiftly changing technological landscape.
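As a closing illustration, the "Impact vs. Effort" matrix from section 1 can be sketched in a few lines of Python. This is only a minimal sketch under stated assumptions: the article defines the two axes (low or high effort, low or high impact) but not the quadrant names or their ranking, so the labels in `QUADRANTS`, the order in `PRIORITY_ORDER`, and the example use cases are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class UseCase:
    name: str
    impact: str  # "low" or "high", as on the matrix axes
    effort: str  # "low" or "high", as on the matrix axes


# Hypothetical quadrant labels keyed by (impact, effort);
# the article names the axes but not the quadrants.
QUADRANTS = {
    ("high", "low"): "quick win",
    ("high", "high"): "major project",
    ("low", "low"): "fill-in",
    ("low", "high"): "deprioritize",
}

# Assumed ranking: most value for the least investment comes first.
PRIORITY_ORDER = ["quick win", "major project", "fill-in", "deprioritize"]


def quadrant(use_case: UseCase) -> str:
    """Return the matrix quadrant for a use case."""
    return QUADRANTS[(use_case.impact, use_case.effort)]


def prioritize(use_cases: List[UseCase]) -> List[UseCase]:
    """Sort use cases by quadrant rank; ties keep their input order."""
    return sorted(use_cases, key=lambda uc: PRIORITY_ORDER.index(quadrant(uc)))


# Hypothetical use cases, for illustration only.
EXAMPLE_USE_CASES = [
    UseCase("Customer-facing shopping assistant", impact="high", effort="high"),
    UseCase("Rebuild legacy reports", impact="low", effort="high"),
    UseCase("Draft product descriptions", impact="high", effort="low"),
    UseCase("Summarize internal meeting notes", impact="low", effort="low"),
]

if __name__ == "__main__":
    for uc in prioritize(EXAMPLE_USE_CASES):
        print(f"{quadrant(uc):>13}: {uc.name}")
```

A two-level scale keeps the exercise quick; a team that needs finer distinctions could swap the labels for numeric scores without changing the overall shape.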


Introducing Enhanced ESG Data and Reporting Features in Microsoft Cloud for Sustainability

2/26/2024

Key Tools for CSRD Compliance Preparation

The evolution of Microsoft Cloud for Sustainability has been notable over the past year. With the anticipation of new Environmental, Social, and Governance (ESG) reporting regulations, such as those from the EU’s Corporate Sustainability Reporting Directive (CSRD), we're unveiling advanced features designed to aid organizations in gathering and managing ESG data more effectively. Microsoft Sustainability Manager, a component of Microsoft Cloud for Sustainability, is expanding to provide users with comprehensive insights into their environmental impact, covering aspects like carbon, water, and waste. These upgrades are tailored to help organizations develop an extensive ESG data estate and meet upcoming reporting mandates.

Project ESG Lake: Accelerating Insight Acquisition

In response to the increasing demands for adherence to ESG reporting standards and the growing appetite for generative AI-based solutions, entities globally are exploring innovative data application and analysis methods. The foundation for risk prediction and the management of sustainability issues lies in the integration of diverse data sources and services for a thorough analysis of sustainability, operational, and financial data.

Organizations are reevaluating their data management strategies, embedding sustainability more deeply into their operations and business models. The initial step involves organizing the data estate: collecting, aggregating, standardizing, and analyzing data, and tracing it back to its origins to support auditing activities.

We introduce Project ESG Lake, designed to enhance organizations' ability to manage and prepare their data for comprehensive analysis, propelling them toward their sustainability objectives. This solution provides an extensive ESG data model, including over 400 tables spanning various categories such as carbon, water, waste, social, governance, and biodiversity. It enables the creation of a centralized data estate, transforming data from across the organization into a standardized schema ready for advanced analytics and reporting.

Empowering Organizations with Project ESG Lake

Project ESG Lake enables the consolidation of ESG data from disparate systems, standardizing it according to the ESG data model for a centralized ESG data estate. Features include:

Preparing for regulatory compliance involves understanding compliance positions, tracking necessary actions, and documenting evidence for impending regulations. To facilitate this, targeted features and a series of prebuilt ESG reporting templates aligned with major ESG regulatory standards are being rolled out.

Comprehensive Emissions Data Management

Complete emissions impact accounting requires data collection and management for all emissions categories across operations and supply chains. The latest update to Microsoft Sustainability Manager introduces the final round of Scope 3 emissions calculation models, covering all 15 categories and enhancing organizations' ability to report comprehensively.

Waste and Water Data Capabilities for Sustainability Progress

New capabilities in waste and water data management are introduced to provide organizations with a unified view of their waste sustainability data and accelerate their water sustainability efforts. These include waste data ingestion, visualization, and sustainability disclosure in Microsoft Sustainability Manager, along with water revenue intensity calculation, water storage tracking, and goal-tracking for comprehensive sustainability management.

Leveraging Microsoft Cloud for Accelerated Sustainability Efforts

The Microsoft Cloud for Sustainability API now enables direct data lake export for Microsoft 365 and Azure emissions, streamlining analytics and reporting processes. This integration offers organizations the flexibility to connect their data with Microsoft and third-party BI tools, enhancing their sustainability journey.

Engaging with the Sustainability Community and Exploring Partner Solutions

Stay informed and engaged with the Microsoft Cloud for Sustainability community portal and explore partner solutions tailored to unique ESG data management needs on Microsoft AppSource, facilitating a more sustainable future.
Walgreens, a leading pharmacy chain, has revolutionized its prescription processing across its extensive network of nearly 9,000 stores by harnessing the power of Azure resources. Annually, the company handles hundreds of millions of prescription dispenses, each accompanied by numerous data points. By leveraging Microsoft Azure Databricks, Azure Synapse Analytics, and additional Azure services, Walgreens has developed an intelligent data platform capable of swiftly analyzing prescription data to generate actionable insights in minutes. This innovation benefits not only pharmacists at the customer interface but also the operational and analytics teams company-wide, offering immediate access to detailed transactional data and insights.

Streamlining Prescription Processing with Azure

Sashi Venkatesan, Director of Product Engineering for Pharmacy and Healthcare Data Product Line at Walgreens, highlights the transformative impact of Azure resources on data processing and insight generation. The advanced data engineering pipelines facilitate real-time data analysis, enabling pharmacists and technicians to access critical insights rapidly.

Navigating the Prescription Dispense Ecosystem

The complexity behind each prescription transaction involves numerous stakeholders, including doctors, pharmacists, insurers, and patients. Walgreens' scale, with about 818 million prescriptions filled during the 2020 fiscal year, underscores the need for a robust infrastructure to manage this intricate process efficiently.

Towards a Future-Proof Infrastructure

Walgreens' commitment to enhancing customer service and operational reliability led to a collaboration with Microsoft. The goal was to future-proof its infrastructure with a cloud-first strategy and modern AI capabilities, thus ensuring scalability and agility in processing meaningful data points and translating them into actionable insights.

Empowering Pharmacists with Intelligent Data

The move towards a unified, intelligent data platform aimed to simplify innovation and adaptability in response to changing customer behaviors. Luigi Guadagno, Vice President of Pharmacy and Healthcare Platform Technology at Walgreens, emphasizes the platform's role in supporting pharmacists with critical patient information, thereby enhancing healthcare outcomes.

An Intelligent, Connected Data Platform

The Information, Data, and Insights (IDI) platform, developed in partnership with Microsoft Consulting Services and Tata Consultancy Services (TCS), adopts an event-based microservices architecture. It efficiently processes key prescription and pharmacy inventory data, delivering real-time insights to pharmacists through user-friendly dashboards and data visualizations in Power BI.

Achieving Operational Excellence with Azure

The platform's capability to handle around 40,000 transactions per second during peak times, thanks to Azure Databricks' scalability, marks a significant improvement over the previous system. The integration of Azure Data Lake Storage, Azure SQL Database, and Azure Cosmos DB ensures that data from prescription dispenses is rapidly available to Walgreens' data warehouse, enhancing response times.

Enhancing Patient Care and Engagement

The advanced data engineering pipelines powered by Azure have fundamentally improved how pharmacists serve patients, offering clear and concise insights that enable better patient care. This technological advancement has also positively impacted customer experiences, highlighting the value of an agile technology platform in today’s evolving retail and healthcare landscape.

Conclusion: A Model for Future Growth

Walgreens' successful deployment of an Azure-powered data platform sets a precedent for leveraging ERP and Azure resources in the healthcare industry. It demonstrates the potential for technology to not only streamline operational processes but also significantly improve patient engagement and care outcomes. This collaboration between Walgreens, Microsoft, and TCS exemplifies the power of digital transformation in facilitating growth and enhancing service delivery in the healthcare sector.

Microsoft Cloud for Sustainability: Accelerate Your Eco-Friendly Progress with Advanced Features

2/7/2024

Celebrating Earth Month with Innovative Updates

As Earth Month unfolds, in light of the latest recommendations from the Intergovernmental Panel on Climate Change (IPCC) to accelerate the journey towards net-zero emissions, we're excited to unveil the latest enhancements to the Microsoft Cloud for Sustainability. These updates bring forward superior analytics, including Scope 3 waste data, elevated data access and management capabilities, enriched reporting, and personalized adjustments. These tools are designed to assist entities in achieving their ecological objectives more swiftly and efficiently, paving the way for enhanced ERP data management practices within the realm of sustainability.

Enrich Analytics with Customizable Dimensions

Organizations are now empowered to introduce personalized dimensions to data model entities within Microsoft Sustainability Manager, enhancing the precision of calculations and analyses. This allows for the correlation of activity or emissions data with additional contextual information, such as emissions per employee count, offering a refined perspective on ERP data management within environmental sustainability efforts. Following the ingestion, mapping, and computation of data with these custom dimensions, it becomes possible to generate detailed insights into manufacturing intensities across various domains. A visualization example includes a dashboard displaying laptop manufacturing emissions data, categorized by assembly line, model, and SKU, illustrating the integration of ERP data management with sustainability analytics.

Propel Forward with Data Approval Management

Particularly beneficial for well-established organizations that maintain rigorous data management workflows, the newly introduced data approval management feature facilitates the staging of data in a pending status. This allows for the storage of data without affecting the ongoing calculations, analyses, and reporting processes. Upon receiving approval from authorized personnel, this data is then seamlessly integrated into all relevant operations, marking a significant step forward in ERP data management and sustainability tracking.

Access Microsoft 365 Emissions Data with Ease

Organizations can now effortlessly access emissions data related to their Microsoft 365 service usage, encompassing a range of applications including Exchange Online, Outlook, SharePoint, and more, via the Microsoft Cloud for Sustainability API.
This API employs a third-party validated emissions calculation methodology, encompassing all three emissions scopes as outlined by the Greenhouse Gas Protocol, thereby enhancing ERP data management capabilities with respect to sustainability metrics.

Streamline Disclosure Preparations with Reporting Enhancements

In anticipation of emerging disclosure regulations, the Sustainability Manager now features customizable reporting capabilities. Organizations can craft reports incorporating various types of sustainability data, such as emissions alongside Scope 1, 2, and 3 activity data, significantly reducing the complexity and volume of reports needed for disclosure preparations.

Custom Views for Emissions Analysis

The allocations dashboard introduces a flexible method for organizations to monitor their emissions generation, allowing for assessments based on headcount, area, production type, or any defined method. This empowers entities to understand the impact of different internal metrics on their overall emissions, integrating ERP data management with sustainability efforts.

Advanced Scope 2 Emissions Tracking

Improvements in accounting for Scope 2 emissions are now available, thanks to an additional calculation methodology and enhanced insights and reporting features. These advancements facilitate comprehensive tracking and reporting of both market- and location-based Scope 2 emissions data, reinforcing the role of ERP data management in environmental sustainability.

Simplify Scope 2 Energy Data Ingestion

The Data Capture feature leverages AI Builder to streamline the ingestion process for purchased energy invoices. By training a custom optical character recognition model, organizations can efficiently process invoices as Scope 2 activity data, further blending ERP data management with sustainability initiatives.
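To make the headcount-based view described under Custom Views for Emissions Analysis more concrete, the arithmetic behind a proportional allocation can be sketched as follows. This is a hedged illustration, not how Microsoft Sustainability Manager computes allocations: the function names `allocate_emissions` and `intensity`, the unit names, and all figures are hypothetical, and a simple proportional split is assumed.

```python
from typing import Dict


def allocate_emissions(total_tco2e: float, weights: Dict[str, float]) -> Dict[str, float]:
    """Split a total emissions figure across units in proportion to a weight
    such as headcount or floor area (assumed proportional allocation)."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return {unit: total_tco2e * w / total_weight for unit, w in weights.items()}


def intensity(emissions_tco2e: float, denominator: float) -> float:
    """Emissions per unit of a business metric, e.g. per employee."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return emissions_tco2e / denominator


# Hypothetical figures, for illustration only.
HEADCOUNT = {"store_a": 30, "store_b": 10, "warehouse": 20}
TOTAL_TCO2E = 120.0

if __name__ == "__main__":
    shares = allocate_emissions(TOTAL_TCO2E, HEADCOUNT)
    for unit, share in shares.items():
        per_employee = intensity(share, HEADCOUNT[unit])
        print(f"{unit}: {share:.1f} tCO2e, {per_employee:.1f} tCO2e per employee")
```

Swapping the headcount dictionary for floor area or production volume gives the other allocation bases the text mentions without changing the calculation.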
Import Data from Various Sources with Guided Experience

Enhanced data processing capabilities allow for quicker and more efficient importation of data from multiple sources. With the introduction of Excel templates, a Power Query guided flow, and partner solutions, users have the flexibility to choose the most suitable method for their data ingestion needs, optimizing ERP data management processes for sustainability tracking.

Enhancing Environmental Credit Processes

The Environmental Credit Service introduces new features to clarify and expedite the validation of environmental claims, promoting transparency and scalability in environmental market ecosystems. This service is a testament to the integration of ERP data management with sustainable market practices.

Microsoft Cloud for Sustainability's Waste Data Model

The newly developed waste data model offers a comprehensive view of waste management data, aiding organizations in their pursuit of waste reduction and the promotion of a circular economy. This innovation marks a significant advancement in combining ERP data management with sustainability efforts.

As we continue to enhance our offerings, the Microsoft Cloud for Sustainability is poised to empower organizations in their journey towards a more sustainable future, integrating ERP data management with comprehensive environmental sustainability efforts.

The retail sector in 2023 has been on a dynamic journey, navigating through economic uncertainties and evolving customer expectations. Despite these challenges, the industry has exhibited remarkable adaptability and innovation. A prime example of this is the adoption of next-generation AI. This advanced technology, along with other digital tools, has revolutionized retail operations, introducing a new era of efficiency and customer-focused strategies.

Understanding Next-Gen AI in Retail

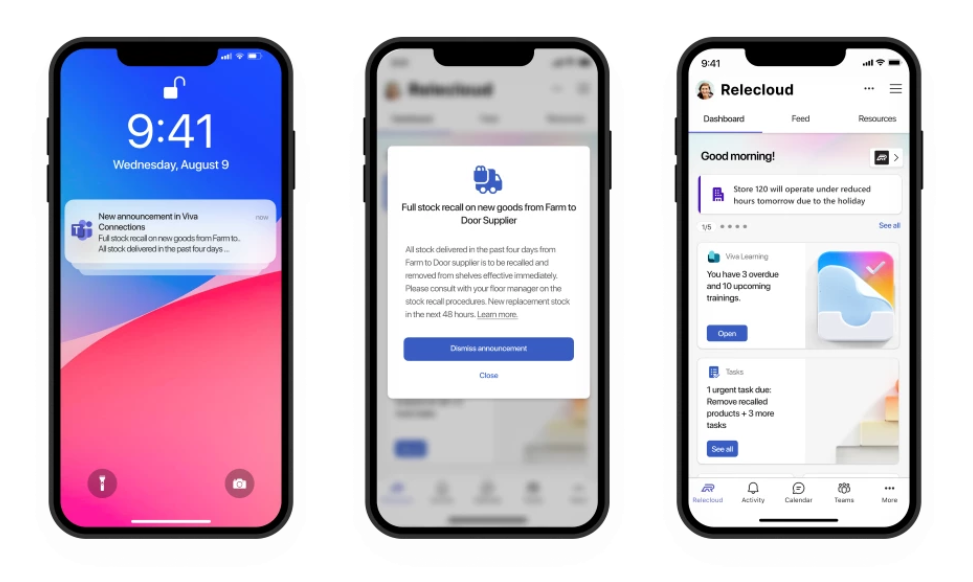

Our focus is on harnessing next-generation AI to enhance the capabilities of frontline workers.

The synergy between innovative technology and our skilled retail workforce is reshaping our industry's core. We're embracing AI and advanced tools, uncovering new realms of productivity, efficiency, and customer focus. This approach, integrating elements of manufacturing resource planning, has optimized task management and automated workflows, allowing frontline teams to concentrate on exceptional customer service.

Streamlining Frontline Operations with AI and Digital Tools

Retail employees play a vital role in customer engagement and smooth store functioning. However, challenges like understaffed shifts and outdated systems can hinder their efficiency.

Digital Transformation of Routine Store Processes

Retailers are now using Store Operations Assist within Microsoft Teams, a move that exemplifies the integration of manufacturing resource planning into everyday retail operations. This tool helps store associates excel in customer service by providing access to sophisticated retail workflows and insights. Store managers can also leverage the new Shifts plug-in for Microsoft 365 Copilot, enhancing schedule management and operational insights. This integration brings manufacturing resource planning principles to the forefront, streamlining shift management and operational data access.

Retail Evolution: Resilience Meets Innovation

Retail stores are reimagining their operations with AI and digital tools, leading to more efficient and customer-centric environments. Brands like L’Oréal exemplify this digital transformation, enhancing frontline workers' roles through these tools.

Revolutionizing Retail Communication with Modern Solutions

Effective communication within the retail chain is vital. Microsoft Teams unifies this communication, fostering better collaboration and information flow. This evolution represents a shift from isolated store operations to a connected retail ecosystem, embracing elements of manufacturing resource planning.

Central Planning, Regional Management, Local Execution

Viva Connections within Teams enables targeted communications and notifications for store associates, streamlining operational messages and updates.

Tailoring the Retail Home Experience in Teams

To cater to the diverse needs of frontline employees in retail, Microsoft has introduced the ability to create multiple home experiences within Teams, reflecting each brand's unique identity. This feature aligns with the principles of manufacturing resource planning, offering a customized, brand-specific dashboard for frontline teams.

Instant Communication with Retail Frontline Teams

Communication is key, especially for larger and more dispersed frontline teams. Integrations with industry-standard devices enhance the ability to communicate effectively, crucial for manufacturing resource planning.

Enhancing Frontline Engagement through Streamlined Communication

AI capabilities are instrumental in task management and producing localized brand and marketing communications. Copilot in Microsoft Viva Engage offers personalized messaging suggestions, enhancing engagement and aligning with manufacturing resource planning strategies.

Simplifying Trusted Frontline Experiences

Simplifying processes is essential for retail organizations. By integrating technologies that automate routine tasks, retail companies can enable their frontline to focus on higher-value tasks, a principle central to manufacturing resource planning.

Securing Customer Data with Microsoft 365 on Shared Devices

Managing in-store devices is simplified with Microsoft Intune and Entra ID, ensuring secure and efficient sign-in experiences for frontline workers, an approach that complements manufacturing resource planning.

Microsoft's Solutions for the Frontline Retail Industry

The retail industry continues to demonstrate resilience and innovation. Microsoft is at the forefront, providing solutions that balance operational efficiency with exceptional customer and employee experiences. This holistic approach, integrating manufacturing resource planning principles, is setting a sustainable course for the industry's future.

Improved Decision-Making Through Data Accuracy

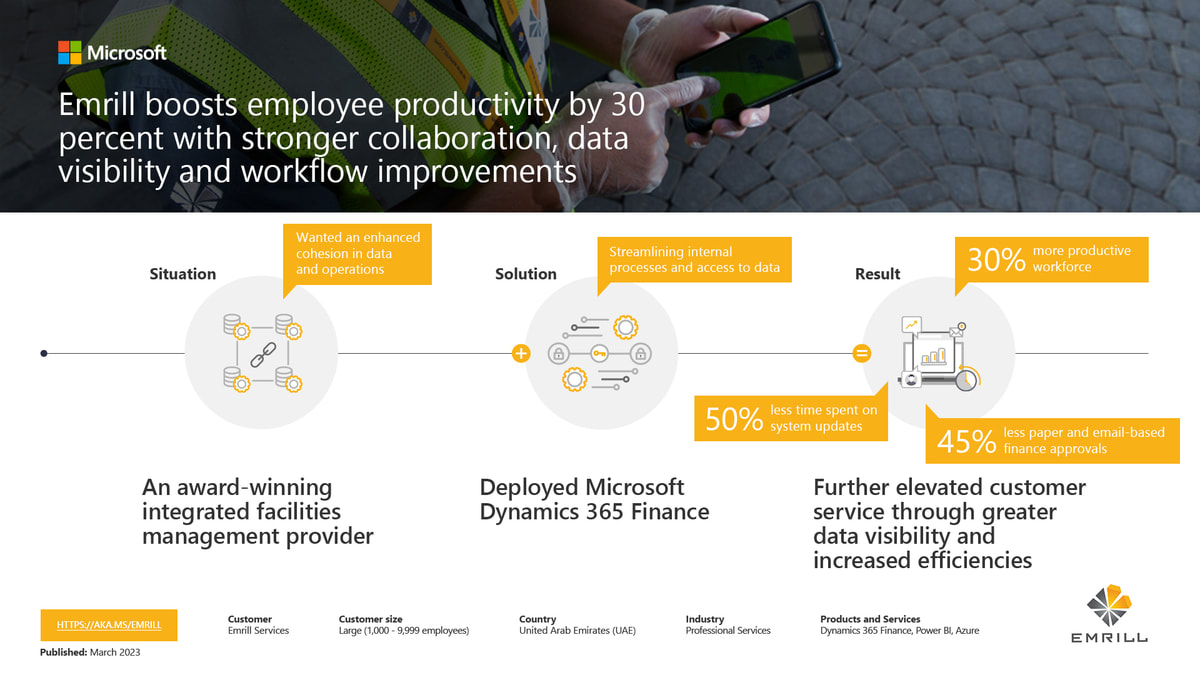

The adoption of the ERP solution significantly enhanced data visibility and accuracy, key elements for making informed decisions in the fast-paced facilities management industry. Enhanced reporting and dashboard capabilities led to greater confidence in decision-making, fostering trust and compliance during audits. The system also highlighted training needs within the team, facilitating targeted and effective skill development.

Elevating Customer Experience to New Heights

At the heart of Emrill's digital transformation was the goal of delivering a world-class customer experience. The streamlined processes, automation, and innovative approaches brought about by Microsoft Dynamics 365 Finance played a crucial role in achieving this goal. The enhanced efficiency and service quality significantly boosted customer satisfaction and set new standards in the industry.

Conclusion

Continuous Improvement and Future Endeavors

Emrill's journey with Microsoft Dynamics 365 Finance is not just about present gains but also about future possibilities. The company is continuously exploring new avenues, like integrating the Walkie Talkie app in Microsoft Teams, to further streamline communication and operational efficiency. This ongoing commitment to improvement and innovation ensures that Emrill Services remains at the forefront of its industry, continuously delivering exemplary service.

Partnering for Success

The successful implementation and ongoing optimization of Microsoft Dynamics 365 Finance at Emrill Services is a testament to the power of strategic partnerships and the impact of embracing digital transformation. With the support and expertise of the Microsoft FastTrack team and Microsoft Lifecycle Services, Emrill has not only transformed its internal operations but has also set a benchmark for others in the industry to follow.

